Understanding HR 32255: The Algorithmic Accountability Act

HR 32255, the Algorithmic Accountability Act, is a bill that would regulate the use of artificial intelligence (AI) in the workplace. It was introduced in the House of Representatives on January 3, 2023, and is currently in committee.

The bill is intended to protect workers from the potential negative effects of AI, such as job displacement and discrimination. It would require employers to notify workers when AI is used in the workplace and to give workers the right to opt out of AI-based decisions.

The bill is still in its early stages, but it is an important step in addressing the impact of AI on the workplace. As AI technology develops, the bill may be amended to reflect the changing landscape.

HR 32255, the Algorithmic Accountability Act of 2023, is a House bill that addresses the ethical and responsible use of artificial intelligence (AI) in the workplace. It aims to protect workers from the risks associated with AI while promoting innovation and economic growth. Its key provisions are:

- Transparency: Requires employers to disclose the use of AI in hiring, promotion, and other employment decisions.

- Fairness: Prohibits the use of AI systems that discriminate based on protected characteristics such as race, gender, or religion.

- Accuracy: Ensures that AI systems are trained on accurate and unbiased data to minimize errors and bias.

- Explainability: Mandates that employers provide workers with explanations for AI-based decisions that affect their employment.

- Human Oversight: Requires employers to maintain human oversight of AI systems to prevent unintended consequences.

- Privacy: Protects the privacy of workers' data used in AI systems.

- Accountability: Establishes clear accountability mechanisms for employers in the event of AI-related harm or discrimination.

- Enforcement: Authorizes the Equal Employment Opportunity Commission (EEOC) to enforce the legislation and investigate complaints.

- Stakeholder Engagement: Requires employers to consult with workers and other stakeholders in the development and implementation of AI systems.

Together, these provisions of HR 32255 are intended to ensure that AI is used responsibly and ethically in the workplace, fostering a fair and equitable work environment while harnessing AI's potential benefits.

Transparency

The Algorithmic Accountability Act of 2023 (HR 32255) recognizes the importance of transparency in the use of AI in the workplace. By requiring employers to disclose the use of AI in hiring, promotion, and other employment decisions, the law promotes fairness, equity, and accountability.

Transparency is a critical component of HR 32255 because it allows workers to understand how AI is being used to evaluate them. This understanding enables workers to make informed choices about their careers and to challenge any biases or discriminatory practices that may be embedded in AI systems. For example, a job applicant who knows that an AI system screens applications can ask how their application will be evaluated and challenge a decision they believe was influenced by their race, gender, or other protected characteristics.

Practical applications of this transparency requirement include providing workers with clear and accessible information about the AI systems being used, including the purpose of the systems, the data used to train the systems, and the decision-making process used by the systems. Employers may also need to provide workers with the opportunity to review and contest AI-based decisions that affect their employment.
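
The information listed above (purpose, training data, decision process, and a way to contest decisions) could be assembled from a small structured record. The following Python sketch is illustrative only: the `AISystemNotice` class, its field names, and the contact address are hypothetical and are not terms defined by HR 32255.

```python
from dataclasses import dataclass


@dataclass
class AISystemNotice:
    """Hypothetical record of what a worker-facing AI notice might contain.

    Field names are illustrative assumptions, not terms defined by HR 32255.
    """
    system_name: str
    purpose: str
    training_data: str
    decision_process: str
    contest_contact: str

    def render(self) -> str:
        # Produce a short plain-language notice, one item per line.
        return "\n".join([
            f"AI system in use: {self.system_name}",
            f"Purpose: {self.purpose}",
            f"Data used to train it: {self.training_data}",
            f"How it reaches decisions: {self.decision_process}",
            f"To review or contest a decision, contact: {self.contest_contact}",
        ])


notice = AISystemNotice(
    system_name="Resume Screener",
    purpose="Ranks applications for recruiter review",
    training_data="Anonymized applications from 2019-2022",
    decision_process="Scores listed skills against job requirements; a recruiter makes the final decision",
    contest_contact="hr-review@example.com",
)
print(notice.render())
```

Keeping the notice as structured data rather than free text makes it easier to check that every required item is present before the notice is published.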

By promoting transparency in the use of AI, HR 32255 fosters a more ethical and equitable workplace, where workers can trust that they are being evaluated fairly and without bias.

Fairness

The Algorithmic Accountability Act of 2023 (HR 32255) recognizes the critical importance of fairness and non-discrimination in the use of AI in the workplace. By prohibiting the use of AI systems that discriminate based on protected characteristics such as race, gender, or religion, the law aims to create a more just and equitable work environment for all.

Fairness is a fundamental pillar of HR 32255 because it ensures that AI systems are used in a way that respects human rights and dignity. Discrimination in any form is unacceptable, and AI should not be used to perpetuate or exacerbate existing biases. For example, an AI system used to screen job applications should not be biased against candidates based on their race or gender. All candidates should have an equal opportunity to be considered for a job, regardless of their personal characteristics.

Practical applications of this fairness requirement include implementing robust testing and auditing procedures to identify and mitigate bias in AI systems. Employers may also need to provide training to employees on how to use AI systems fairly and without bias. Additionally, HR 32255 provides for the establishment of an AI Fairness Office within the Equal Employment Opportunity Commission (EEOC) to investigate and address complaints of AI-related discrimination.
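
HR 32255, as described here, does not prescribe a specific bias test. One widely used rule of thumb in US employment practice is the "four-fifths rule" from the federal Uniform Guidelines on Employee Selection Procedures: if a group's selection rate is less than 80% of the highest group's rate, that may indicate adverse impact. The Python sketch below computes those ratios; the function names and sample figures are hypothetical, and a flagged ratio is a signal for closer review, not proof of discrimination.

```python
def selection_rates(outcomes):
    """Return the selection rate (selected / applicants) for each group.

    `outcomes` maps a group label to a (selected, applicants) tuple.
    """
    return {group: selected / applicants for group, (selected, applicants) in outcomes.items()}


def impact_ratios(outcomes):
    """Divide each group's selection rate by the highest group's rate."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any selections")
    return {group: rate / highest for group, rate in rates.items()}


def flag_groups(outcomes, threshold=0.8):
    """List groups whose impact ratio falls below the four-fifths threshold."""
    return sorted(group for group, ratio in impact_ratios(outcomes).items() if ratio < threshold)


# Hypothetical screening results: (selected, applicants) for each group.
results = {"group_a": (50, 100), "group_b": (30, 100)}
print(impact_ratios(results))  # group_b: 0.30 / 0.50 = 0.6
print(flag_groups(results))    # ['group_b']
```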

By promoting fairness in the use of AI, HR 32255 helps to ensure that AI is used as a tool for good, not for discrimination or oppression. The law is a critical step towards creating a more just and equitable workplace for all.

Accuracy

Accurate, unbiased training data is a crucial element of the Algorithmic Accountability Act of 2023 (HR 32255). Such data helps minimize errors and bias in AI systems, leading to fairer and more equitable outcomes.

For instance, if an AI system used for hiring is trained on a dataset that is biased against a particular demographic group, the system may make biased decisions that discriminate against that group. HR 32255 addresses this issue by requiring employers to ensure that the data used to train AI systems is accurate and unbiased. This helps to prevent AI systems from perpetuating existing biases and ensures that all candidates are evaluated fairly.

Practical applications of this accuracy requirement include implementing robust data validation and cleansing procedures to identify and correct errors and biases in the data. Employers may also need to work with data scientists and other experts to develop AI systems that are trained on representative and unbiased datasets.
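
Two simple checks that fit the validation step above are counting missing values and comparing each group's share of the training data with a reference population. This Python sketch is a hypothetical illustration, not a procedure specified by HR 32255; the field names, the reference shares, and the 5-percentage-point tolerance are assumptions. A representation check alone cannot show that data is unbiased, since labels and features can also encode historical bias.

```python
from collections import Counter


def missing_field_counts(records, required_fields):
    """Count, per required field, how many records have it missing or None."""
    counts = Counter()
    for record in records:
        for field in required_fields:
            if record.get(field) is None:
                counts[field] += 1
    return dict(counts)


def representation_gaps(records, group_field, reference_shares, tolerance=0.05):
    """Flag groups whose share of the data differs from a reference share.

    `reference_shares` might come from the applicant pool or the relevant labor
    market. Returns {group: observed share minus reference share} for each
    group outside the tolerance.
    """
    if not records:
        raise ValueError("no records to check")
    observed = Counter(record.get(group_field) for record in records)
    gaps = {}
    for group, expected in reference_shares.items():
        share = observed[group] / len(records)
        if abs(share - expected) > tolerance:
            gaps[group] = round(share - expected, 3)
    return gaps


# Hypothetical training set: 8 records from group "a", 2 from group "b".
training = [{"group": "a", "years_experience": 5} for _ in range(8)]
training += [{"group": "b", "years_experience": None} for _ in range(2)]

print(missing_field_counts(training, ["group", "years_experience"]))  # {'years_experience': 2}
print(representation_gaps(training, "group", {"a": 0.6, "b": 0.4}))   # {'a': 0.2, 'b': -0.2}
```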

By promoting the use of accurate and unbiased data in AI systems, HR 32255 helps to ensure that AI is used in a fair and responsible manner.

Explainability

Explainability is a critical component of the Algorithmic Accountability Act of 2023 (HR 32255). It ensures that employers provide workers with clear and concise explanations for AI-based decisions that affect their employment. This requirement is essential for several reasons.

First, explainability helps to build trust between employers and workers. When workers understand the reasons behind AI-based decisions, they are more likely to trust that the decisions are fair and unbiased. This trust is essential for maintaining a positive and productive work environment.

Second, explainability helps to identify and address potential biases in AI systems. An explanation shows which factors drove a decision, so employers and workers can spot when a system relies on factors that are irrelevant to the job or that stand in for protected characteristics. This helps to ensure that AI systems are used in a fair and equitable manner.

Third, explainability helps to promote worker autonomy. When workers understand the reasons behind AI-based decisions, they are better able to make informed choices about their careers. This autonomy is essential for workers to thrive in the modern workplace.

There are many practical applications of explainability in the workplace. For example, an AI system used for hiring could provide explanations for why a particular candidate was selected for an interview. An AI system used for performance evaluation could provide explanations for why a particular employee received a certain rating. By providing these explanations, employers can help workers to understand the AI-based decisions that affect their employment.
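
For a simple linear scoring model, explanations like these can be computed exactly, because the score is a sum of per-feature terms. The Python sketch below assumes such a model; the feature names and weights are hypothetical. More complex models (for example, neural networks or tree ensembles) need dedicated attribution methods, and their explanations are approximations.

```python
def explain_linear_score(weights, features, bias=0.0, top_n=3):
    """Split a linear model's score into per-feature contributions.

    For a linear model, score = bias + sum(weight * value), so each term is an
    exact, additive account of how much that feature moved the score.
    Returns the score and the `top_n` largest contributions by magnitude.
    """
    contributions = {
        name: weights[name] * value for name, value in features.items() if name in weights
    }
    score = bias + sum(contributions.values())
    top = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)[:top_n]
    return score, top


# Hypothetical interview-screening model and one candidate's features.
weights = {"years_experience": 0.5, "certifications": 1.0, "skills_match": 2.0}
candidate = {"years_experience": 4, "certifications": 1, "skills_match": 0.75}

score, reasons = explain_linear_score(weights, candidate)
print(f"score = {score:.2f}")
for name, contribution in reasons:
    print(f"  {name}: {contribution:+.2f}")
```

The ranked contributions can then be turned into a plain-language reason, such as "years of experience contributed most to this rating."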

The requirement for explainability in HR 32255 is a significant step towards ensuring that AI is used in a fair and responsible manner in the workplace. By providing workers with explanations for AI-based decisions, employers can build trust, identify and address biases, and promote worker autonomy.

Human Oversight

Human oversight is a critical component of the Algorithmic Accountability Act of 2023 (HR 32255). It ensures that employers maintain human oversight of AI systems to prevent unintended consequences. This requirement is essential for several reasons.

- Monitoring and Control: Human oversight provides a level of monitoring and control over AI systems, ensuring that they are used in a safe and responsible manner. Employers can monitor the performance of AI systems, identify any potential risks, and take corrective action as needed.

- Ethical Decision-Making: Human oversight ensures that AI systems are used in an ethical and responsible manner. Humans can make ethical decisions that AI systems may not be able to make, such as decisions involving fairness, equity, and justice.

- Accountability: Human oversight establishes clear lines of accountability for the use of AI systems. Employers are ultimately responsible for the actions of their AI systems, and human oversight helps to ensure that they are held accountable for any unintended consequences.

- Public Trust: Human oversight helps to build public trust in AI systems. When people know that there is human oversight of AI systems, they are more likely to trust that these systems are being used in a safe and responsible manner.
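
One common way to put human oversight into practice is a routing rule that decides which AI recommendations a person must review before they take effect. The Python sketch below is a hypothetical policy, not one set out in HR 32255: every rejection and every low-confidence recommendation goes to a human reviewer. The 0.9 confidence threshold and the action labels are assumptions.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    candidate_id: str
    action: str        # hypothetical labels: "advance" or "reject"
    confidence: float  # model-reported confidence between 0 and 1


def route(rec: Recommendation, min_confidence: float = 0.9) -> str:
    """Return "human_review" or "auto_proceed" for an AI recommendation.

    Illustrative policy: adverse recommendations always go to a person, and so
    do favorable ones the model is not confident about.
    """
    if rec.action == "reject":
        return "human_review"
    if rec.confidence < min_confidence:
        return "human_review"
    return "auto_proceed"


queue = [
    Recommendation("c-101", "advance", 0.97),
    Recommendation("c-102", "reject", 0.99),
    Recommendation("c-103", "advance", 0.62),
]
for rec in queue:
    print(rec.candidate_id, route(rec))
```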

The requirement for human oversight in HR 32255 is a significant step towards ensuring that AI is used in a fair and responsible manner in the workplace. By maintaining human oversight of AI systems, employers can prevent unintended consequences, promote ethical decision-making, and build public trust in AI.

Privacy

The Algorithmic Accountability Act of 2023 (HR 32255) recognizes the importance of protecting the privacy of workers' data used in AI systems. This aspect of the law ensures that employers collect, use, and store workers' data in a responsible and ethical manner. By protecting workers' privacy, HR 32255 helps to build trust between employers and workers and promotes the ethical use of AI in the workplace.

- Data Collection: HR 32255 limits the collection of workers' data to what is necessary for the specific purpose of the AI system. Employers must obtain workers' consent before collecting their data and must provide clear and concise information about how the data will be used.

- Data Use: HR 32255 prohibits the use of workers' data for any purpose other than the specific purpose for which it was collected. Employers must not use workers' data to discriminate against them or to make decisions about their employment that are not based on job-related criteria.

- Data Storage: HR 32255 requires employers to store workers' data securely and to take steps to prevent unauthorized access to the data. Employers must also destroy workers' data when it is no longer needed.

- Worker Access: HR 32255 gives workers the right to access their own data and to request that their data be corrected or deleted. Workers can also opt out of having their data used in AI systems.
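
The collection, storage, and opt-out rules above translate naturally into a few data-handling checks. This Python sketch is a hypothetical illustration: the purpose names, the field allow-list, and the 365-day retention period are assumptions, since the provisions as described here say only that data must be destroyed once it is no longer needed.

```python
from datetime import date, timedelta

# Hypothetical allow-list of the fields each AI purpose may use.
ALLOWED_FIELDS = {
    "resume_screening": {"skills", "years_experience", "certifications"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Keep only the fields permitted for the stated purpose (collection and use)."""
    allowed = ALLOWED_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


def exclude_opted_out(records: list, opted_out_ids: set) -> list:
    """Drop records belonging to workers who opted out of AI processing."""
    return [r for r in records if r["worker_id"] not in opted_out_ids]


def purge_expired(records: list, today: date, retention_days: int = 365) -> list:
    """Keep only records collected within the retention period (storage)."""
    cutoff = today - timedelta(days=retention_days)
    return [r for r in records if r["collected_on"] >= cutoff]


stored = [
    {"worker_id": "w1", "collected_on": date(2023, 1, 15)},
    {"worker_id": "w2", "collected_on": date(2024, 5, 1)},
    {"worker_id": "w3", "collected_on": date(2024, 6, 1)},
]
current = purge_expired(stored, today=date(2024, 7, 1))
usable = exclude_opted_out(current, opted_out_ids={"w3"})
print([r["worker_id"] for r in usable])  # ['w2']
```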

By protecting the privacy of workers' data, HR 32255 helps to ensure that AI is used in a fair and responsible manner in the workplace. The law protects workers from the potential risks of AI, such as discrimination and privacy violations. It also promotes the ethical use of AI by requiring employers to be transparent about how they collect, use, and store workers' data.

Accountability

Within the context of the Algorithmic Accountability Act of 2023 (HR 32255), accountability plays a crucial role in ensuring responsible and ethical use of AI in the workplace. By establishing clear accountability mechanisms, the law aims to hold employers liable for any AI-related harm or discrimination that may occur.

- Employer Responsibility: HR 32255 places the primary responsibility for AI-related actions on employers. They are obligated to oversee the development, deployment, and use of AI systems within their organizations.

- Transparency and Documentation: Employers are required to maintain comprehensive documentation on their AI systems, including their purpose, data sources, and decision-making processes. This transparency promotes accountability and enables scrutiny of AI-related decisions.

- Internal Oversight: HR 32255 encourages employers to establish internal oversight mechanisms, such as ethics boards or compliance committees, to review and monitor the use of AI systems. These bodies provide an additional layer of accountability within the organization.

- External Enforcement: The law empowers the Equal Employment Opportunity Commission (EEOC) to investigate and enforce complaints of AI-related discrimination. This external oversight ensures that employers are held accountable for any violations of HR 32255.
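
The documentation requirement above can be supported by an append-only record of each AI-assisted decision. The Python sketch below shows one hypothetical format. The field names, and the choice to store a SHA-256 digest of the inputs so an auditor can later confirm which inputs produced a decision without the log holding raw personal data, are assumptions rather than requirements of HR 32255.

```python
import hashlib
import json
from datetime import datetime, timezone


def log_decision(log, system_id, model_version, inputs, outcome, reviewer=None):
    """Append one JSON audit entry for an AI-assisted employment decision.

    The inputs are stored only as a SHA-256 digest of their canonical JSON form.
    """
    canonical = json.dumps(inputs, sort_keys=True)
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "system_id": system_id,
        "model_version": model_version,
        "inputs_sha256": hashlib.sha256(canonical.encode("utf-8")).hexdigest(),
        "outcome": outcome,
        "human_reviewer": reviewer,
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry


audit_log = []
log_decision(audit_log, "resume-screener", "1.2.0", {"skills_match": 0.75}, "advance", reviewer="recruiter-17")
print(audit_log[0])
```

Recording the model version alongside each decision lets an internal review board or an investigator tie a disputed outcome to the exact system that produced it.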

By establishing clear accountability mechanisms, HR 32255 fosters a culture of responsibility and ethical decision-making in the use of AI in the workplace. It provides a framework for employers to be held accountable for their AI-related actions and promotes the fair and equitable treatment of workers.

Enforcement

Within the framework of the Algorithmic Accountability Act of 2023 (HR 32255), enforcement plays a pivotal role in ensuring compliance and addressing potential violations. By empowering the Equal Employment Opportunity Commission (EEOC) to enforce the legislation and investigate complaints, HR 32255 establishes a robust mechanism for holding employers accountable for their use of AI in the workplace.

- Investigation Authority: The EEOC is granted the authority to investigate complaints of AI-related discrimination or violations of HR 32255. This includes examining employers' AI systems, data practices, and decision-making processes.

- Enforcement Actions: Upon finding violations, the EEOC can take appropriate enforcement actions, such as issuing cease-and-desist orders, imposing fines, or pursuing legal action against employers.

- Collaboration with Other Agencies: The EEOC may collaborate with other federal agencies, such as the Federal Trade Commission (FTC) or the Department of Justice (DOJ), to investigate and enforce HR 32255. This coordination enhances the effectiveness of enforcement efforts.

- Public Reporting: The EEOC is required to issue regular reports on its enforcement activities, including the number of complaints received, investigations conducted, and enforcement actions taken. This transparency promotes public awareness and accountability.
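
The reporting provision above amounts to aggregating case records into counts. The Python sketch below assumes a hypothetical record layout for complaints; the field names and action labels are illustrative and do not reflect any actual EEOC data format.

```python
from collections import Counter


def enforcement_summary(complaints):
    """Aggregate complaint records into the counts a public report might include.

    Each complaint is a dict with "investigated" (bool) and "action"
    (None, or a label such as "fine" or "cease_and_desist").
    """
    actions = Counter(c["action"] for c in complaints if c["action"] is not None)
    return {
        "complaints_received": len(complaints),
        "investigations_conducted": sum(1 for c in complaints if c["investigated"]),
        "enforcement_actions": sum(actions.values()),
        "actions_by_type": dict(actions),
    }


complaints = [
    {"investigated": True, "action": "fine"},
    {"investigated": True, "action": None},
    {"investigated": False, "action": None},
    {"investigated": True, "action": "cease_and_desist"},
]
print(enforcement_summary(complaints))
```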

The enforcement provisions of HR 32255 provide a critical safeguard against the misuse of AI in the workplace. By empowering the EEOC to investigate and enforce the legislation, the law ensures that employers are held responsible for their actions and that workers have a recourse mechanism in case of AI-related discrimination or violations.

Stakeholder Engagement

The Algorithmic Accountability Act of 2023 (HR 32255) recognizes the critical importance of stakeholder engagement in the development and implementation of AI systems in the workplace. Stakeholder engagement ensures that the voices and perspectives of all those affected by AI are considered, leading to more inclusive, fair, and responsible AI systems.

Stakeholder engagement is a key component of HR 32255 because it helps to:

- Identify potential risks and biases: By consulting with workers and other stakeholders, employers can identify potential risks and biases in their AI systems before they are deployed. This helps to mitigate the negative impacts of AI and ensure that the systems are used in a fair and equitable manner.

- Build trust and acceptance: Stakeholder engagement helps to build trust and acceptance of AI systems among workers and other stakeholders. When people feel that they have been involved in the development and implementation of an AI system, they are more likely to trust and accept it.

- Promote innovation: Stakeholder engagement can also promote innovation by bringing together diverse perspectives and expertise. By involving workers and other stakeholders in the design process, employers can create AI systems that are more responsive to the needs of the workplace.

There are many practical applications of stakeholder engagement in the development and implementation of AI systems. For example, an employer could create a working group of employees, managers, and union representatives to provide input on the design of an AI system used for hiring. The working group could help to identify potential biases in the system and develop strategies to mitigate those biases.

Stakeholder engagement is an essential component of responsible AI development and implementation. By involving workers and other stakeholders in the process, employers can create AI systems that are fair, equitable, and beneficial to all.

HR 32255, also known as the Algorithmic Accountability Act of 2023, is a comprehensive piece of legislation that sets forth a framework for the responsible development, deployment, and use of AI systems in the workplace. Through its various provisions, including transparency, fairness, accuracy, explainability, human oversight, privacy, accountability, enforcement, and stakeholder engagement, HR 32255 aims to mitigate the potential risks and maximize the benefits of AI in the workplace. By promoting ethical AI practices, HR 32255 safeguards workers' rights, fosters trust and acceptance of AI systems, and encourages innovation.

The law serves as a reminder that responsible AI development requires a collaborative effort among policymakers, employers, workers, and other stakeholders. As AI continues to transform various aspects of work and life, HR 32255 provides a valuable roadmap for harnessing its potential while addressing its ethical implications. By encouraging ongoing dialogue, research, and policy development, we can shape a future where AI empowers individuals and contributes to a fair and equitable society.

- Celebrity Iou Season 4 Part 2 Is

- Peter Antonacci Obituary Florida Elections Security Chief

- Melissa Fumero Husband Who Is

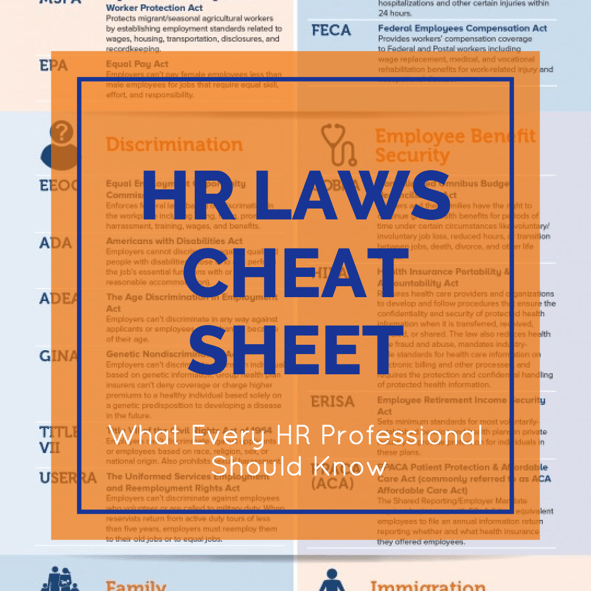

HR Laws Cheat Sheet Fuse Workforce Management

What Is HR Compliance? Definition, Checklist, Best Practices

What is HR 32255 Law in Upload, Explained